This post shows how to host your working local Gradio app on HuggingFace.

This post is part of a series:

Part 1: Create Learner (.pkl file)

Part 2: Create Gradio application file (app.py)

Part 3: Host on HuggingFace account

Part 3: Host on HuggingFace account

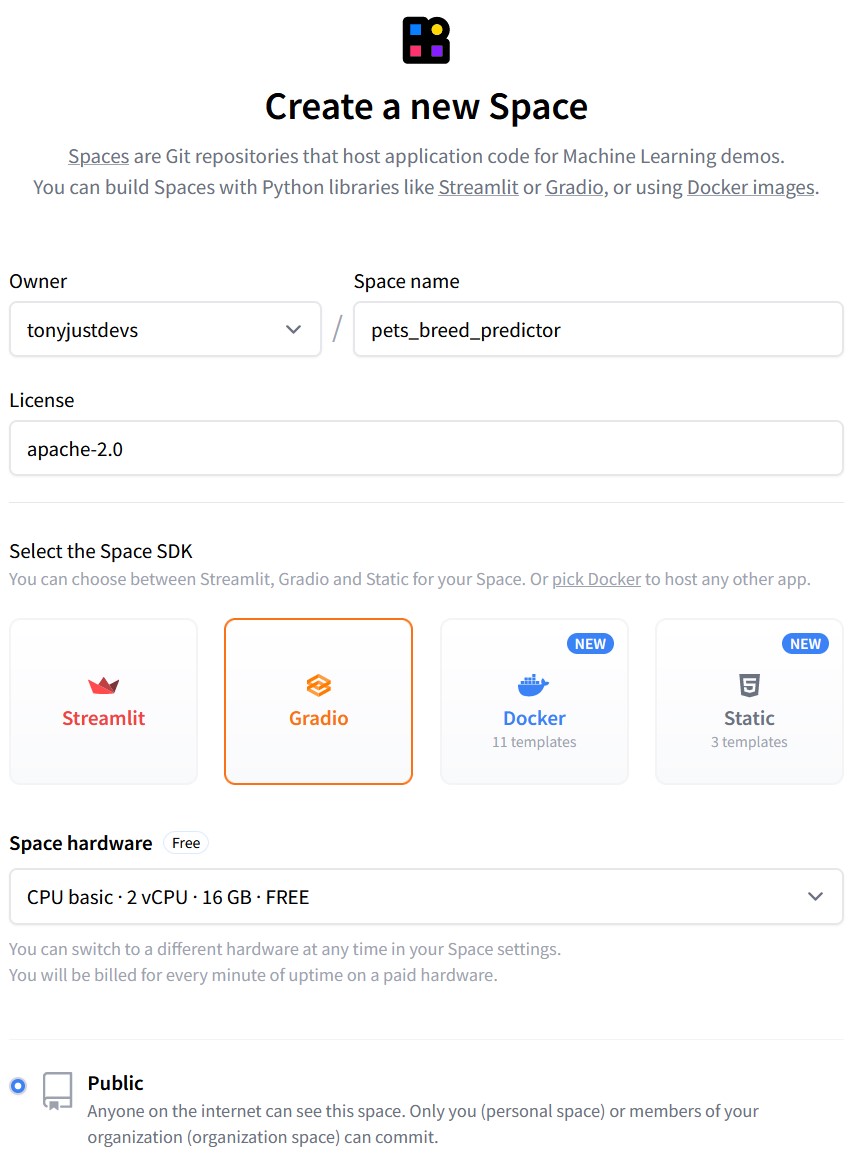

1 Create HuggingFace Account and Create a ‘Space’

- Choose your Space name

- Choose the Apache-2.0 license (a permissive open-source license that lets others reuse the app)

- Choose Gradio

- Choose the Free option

- Choose Public (so you can show it to the world!)

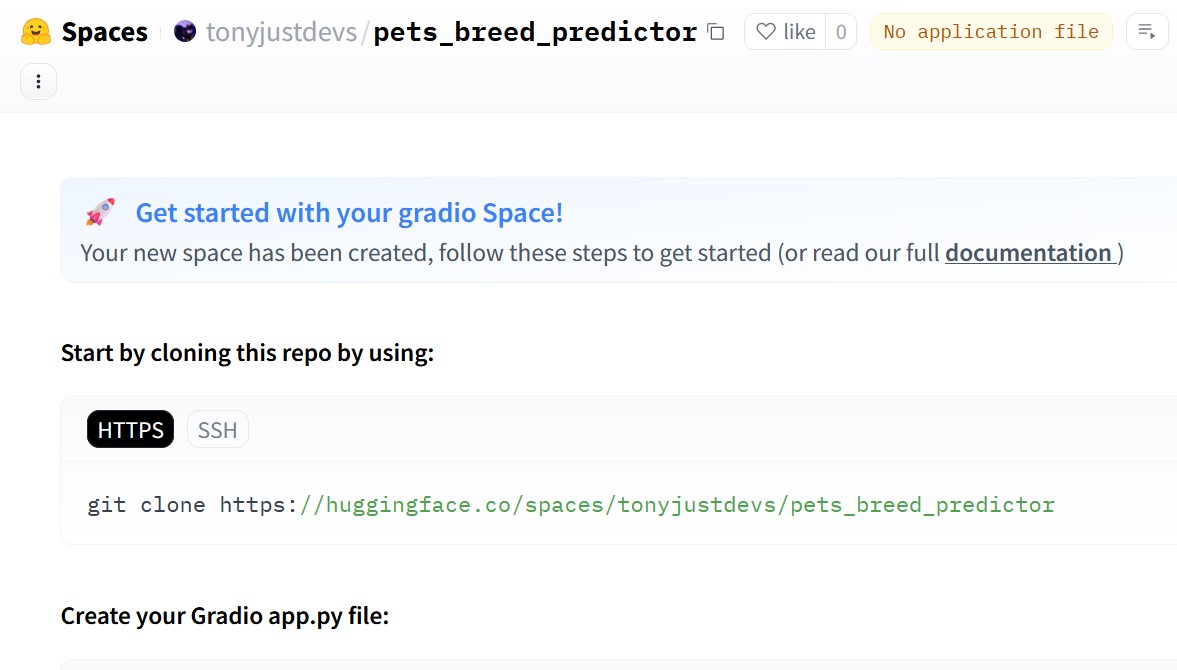

2 Clone the repo

This creates a local copy of the Space's repository, which lets us deploy the Gradio app to HuggingFace:

git clone https://huggingface.co/spaces/tonyjustdevs/pets_breed_predictor

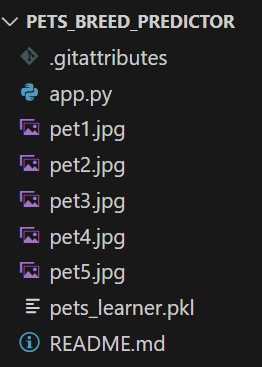
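The clone URL follows the pattern `https://huggingface.co/spaces/<user>/<space>`, so it can be built from your own account and Space name. Here is a minimal sketch; the helper name `space_clone_url` is made up for illustration and is not part of any library.

```python
def space_clone_url(user, space):
    """Build the git clone URL for a HuggingFace Space (hypothetical helper)."""
    return f"https://huggingface.co/spaces/{user}/{space}"


# Print the full command for the Space used in this post.
print("git clone " + space_clone_url("tonyjustdevs", "pets_breed_predictor"))
```

Swap in your own username and Space name to get the command for your repo.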

2.1 Gather your files

Recall the files we needed to run the app locally in Part 2.

Copy them into the cloned HuggingFace folder:

- Learner (.pkl)

- Pet examples (pets.jpg)

- Gradio app (app.py)

A quick check that works for me: the local git folder and the HuggingFace Space should have the same name, here pets_breed_predictor.
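If you'd rather check the folder with a script before pushing, something like the sketch below could work. It assumes the example image is literally named `pets.jpg` and that the Learner is the only `.pkl` file; `missing_files` is a hypothetical helper, so adjust the names to match your own files.

```python
from pathlib import Path


def missing_files(folder):
    """Return the items still missing from the cloned Space folder."""
    folder = Path(folder)
    missing = []
    # The Learner's file name is not fixed, so accept any .pkl file.
    if not any(folder.glob("*.pkl")):
        missing.append("*.pkl")
    # Assumed file names, taken from the list above.
    for name in ("pets.jpg", "app.py"):
        if not (folder / name).is_file():
            missing.append(name)
    return missing
```

Calling `missing_files("pets_breed_predictor")` from the parent folder returns an empty list once everything is in place.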

3. Push to HuggingFace Repo
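The push is the usual `git add`, `git commit`, `git push` sequence run inside the cloned folder. Below is a rough sketch that wraps those three commands in Python; the function names, the commit message and the `dry_run` flag are my own choices for illustration. It assumes git is installed and you are already authenticated with HuggingFace.

```python
import subprocess
from pathlib import Path


def push_commands(message):
    """Return the git commands that stage, commit, and push everything."""
    return [
        ["git", "add", "."],
        ["git", "commit", "-m", message],
        ["git", "push"],
    ]


def push_space(repo_dir, message, dry_run=True):
    """Print each command, or run it inside repo_dir when dry_run is False."""
    for cmd in push_commands(message):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first command that fails.
            subprocess.run(cmd, cwd=Path(repo_dir), check=True)
```

Calling `push_space("pets_breed_predictor", "Add app files")` only prints the commands; pass `dry_run=False` to actually run them.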

Once you've successfully pushed your app (the build could take several minutes), it is live! Congrats!

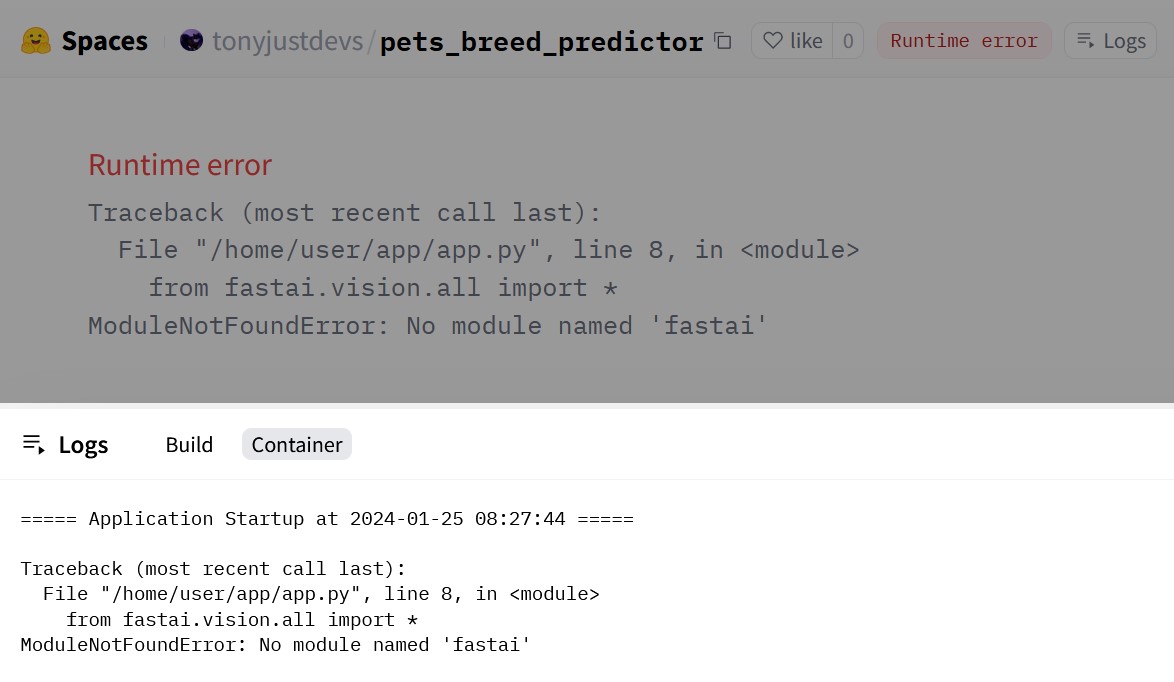

If, like me, you forgot to include requirements.txt, you'll be greeted with this error.

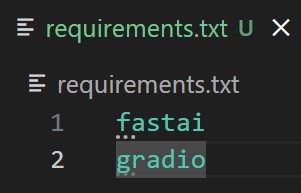

3.1 Add the requirements.txt

We imported two libraries, fastai and gradio, so list both in the requirements.txt file. Then commit and push.
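The file itself is just one package name per line. As a small sketch, it could be generated like this; `write_requirements` is a hypothetical helper, and no version pins are included because this post doesn't specify any.

```python
from pathlib import Path


def write_requirements(folder, packages=("fastai", "gradio")):
    """Write one package name per line to requirements.txt inside folder."""
    path = Path(folder) / "requirements.txt"
    path.write_text("\n".join(packages) + "\n")
    return path
```

Running `write_requirements("pets_breed_predictor")` creates the file in the cloned folder, ready to commit and push.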

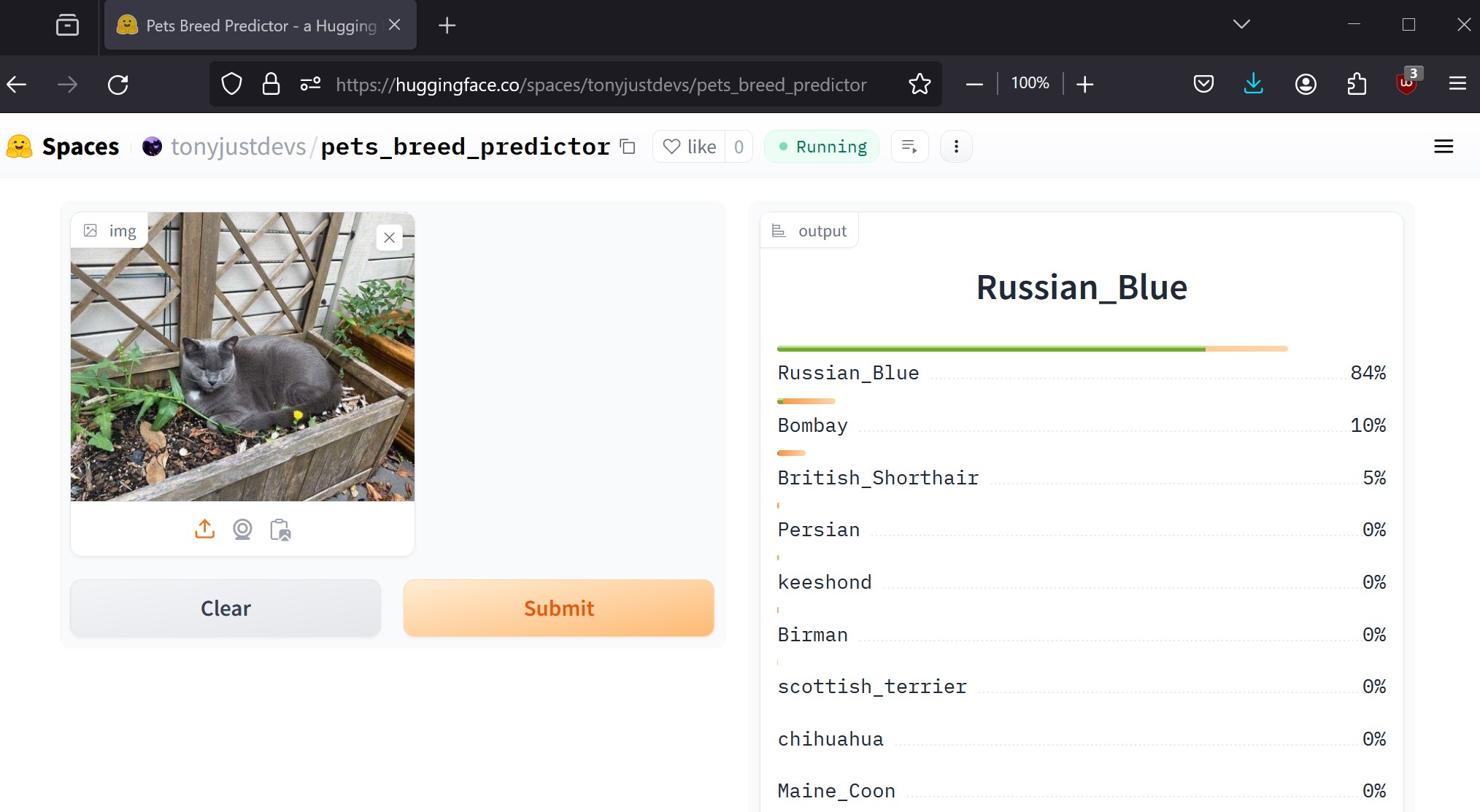

4 Web App Is Complete and Live

If all goes well, the HuggingFace space is hosting the Gradio App!

Check out my web app here!