!pip install -Uqq fastai

from fastai.vision.all import *

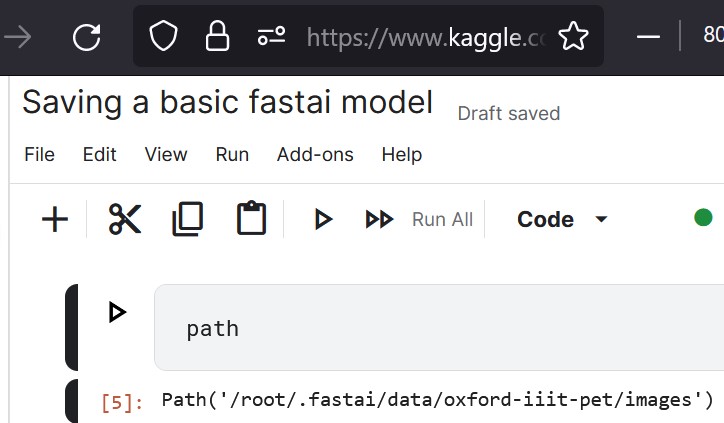

This is a short tutorial on saving (exporting) a fastai model to a pkl file.

1. Load Fast AI Libraries and Download Dataset

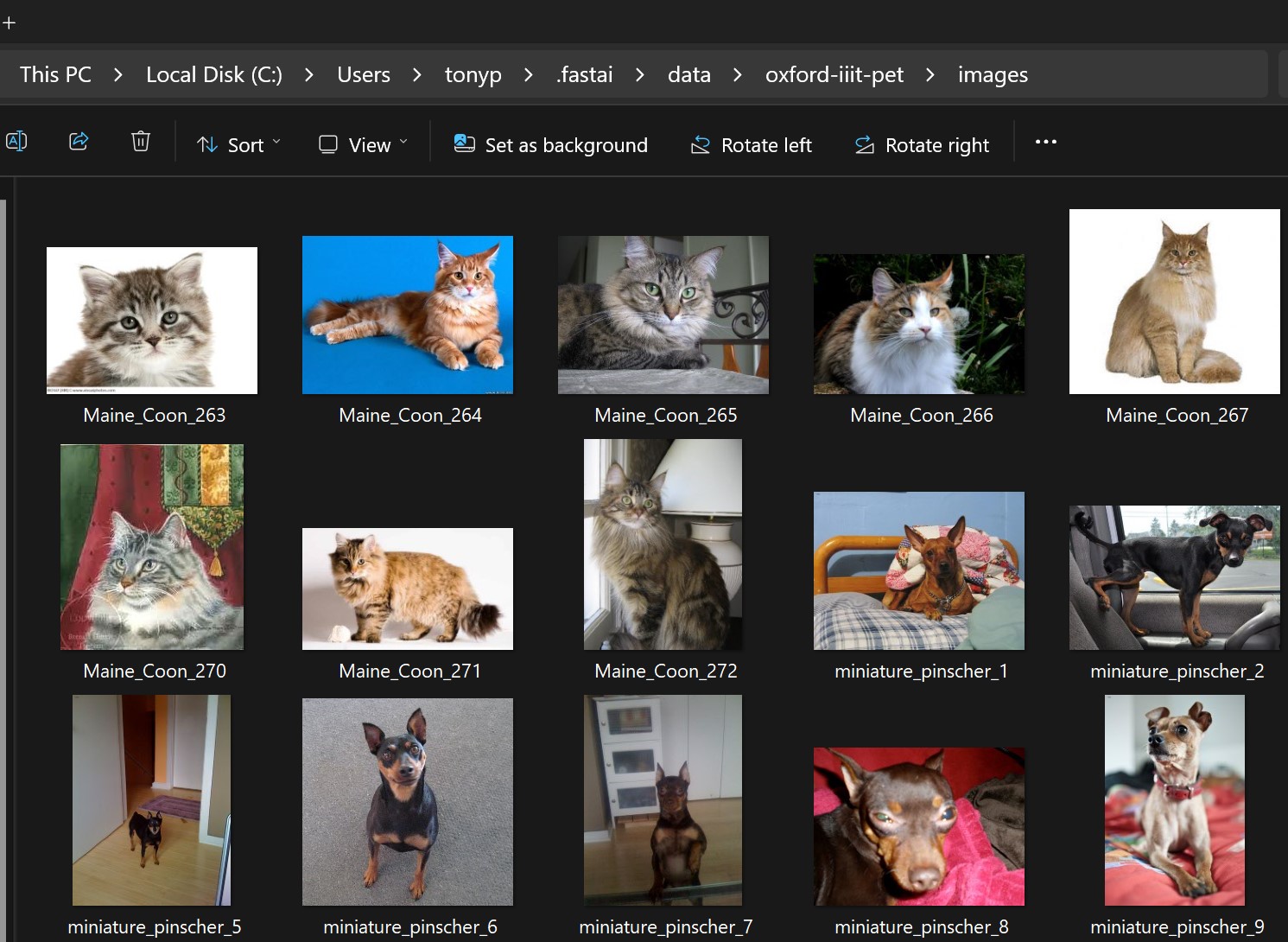

path = untar_data(URLs.PETS)/'images'
path

Path('C:/Users/tonyp/.fastai/data/oxford-iiit-pet/images')

If you ran it in Google Colab or Kaggle (recommended, it’s faster), the data will be stored in the cloud.

If you ran it locally, it’s stored on your machine and you can take a look at all the cute images! (Not recommended, it’s slow.)

2. Labelling Function

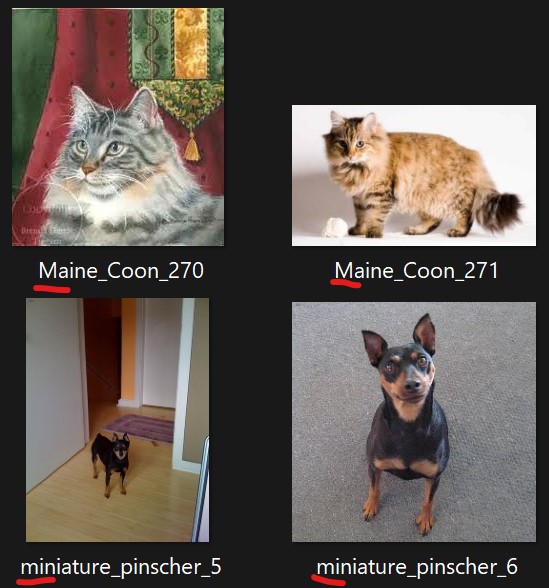

def is_cat(x): return x[0].isupper()

Our data must be consistently labelled before it is passed into the model.

For this particular dataset, a filename starting with a capital letter denotes a cat; otherwise it is a non-cat (a dog, in this case).

Let’s write a function that turns a file name into a label (pseudo-code):

1. Pass in the file name,

2. Obtain its first character,

3. Check whether it is upper case,

4. If True, then it is a cat.
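To see those four steps in action, here is a small sketch that runs is_cat over a handful of file names. The names are illustrative ones written in the dataset's breed_number.jpg style, not a listing of the actual files. Note that the function expects just the file name: a full Windows path such as C:/... would always start with a capital letter. As far as I can tell, from_name_func takes care of this by handing the function only the name.

```python
def is_cat(x):
    # Cat breeds are capitalised in this dataset, dog breeds are lower case.
    return x[0].isupper()

# Illustrative file names (assumed, in the dataset's naming style).
examples = ['Abyssinian_1.jpg', 'Bengal_12.jpg', 'beagle_3.jpg', 'pug_7.jpg']

for name in examples:
    label = 'cat' if is_cat(name) else 'dog'
    print(f"{name:>18} -> {label}")
```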

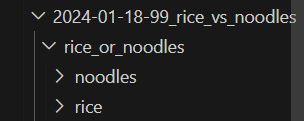

There are various ways to supply labels to our model. In a previous blog, Rice vs Noodles, the label was supplied via the parent folder’s name (a rice folder and a noodle folder).

Fast AI provides helper functions for the most common ways data is labelled, so the labels can be passed into our models.
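For comparison, here is a rough sketch of the parent-folder approach using only the standard library. The folder layout and file names are made up for illustration, and label_from_parent is a hypothetical helper name. fastai ships a function called parent_label that does essentially the same job.

```python
from pathlib import PurePosixPath

def label_from_parent(file_path):
    # The label is simply the name of the folder the image sits in.
    return PurePosixPath(file_path).parent.name

# Hypothetical paths, assuming one folder per class.
paths = ['food/rice/img_001.jpg', 'food/noodles/img_002.jpg']

for p in paths:
    print(p, '->', label_from_parent(p))
```

The trade-off is where the label lives: in the folder name here, in the first letter of the file name for the pets dataset.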

3. DataLoader

Create the DataLoaders and supply the labelling function we wrote as label_func.

dls = ImageDataLoaders.from_name_func('.',
    get_image_files(path), valid_pct=0.2, seed=42,
    label_func=is_cat,
    item_tfms=Resize(192))

c:\Users\tonyp\miniconda3\envs\fastai\Lib\site-packages\fastai\torch_core.py:263: UserWarning: 'has_mps' is deprecated, please use 'torch.backends.mps.is_built()'
  return getattr(torch, 'has_mps', False)

4. Fine-tune (Non-GPU vs GPU)

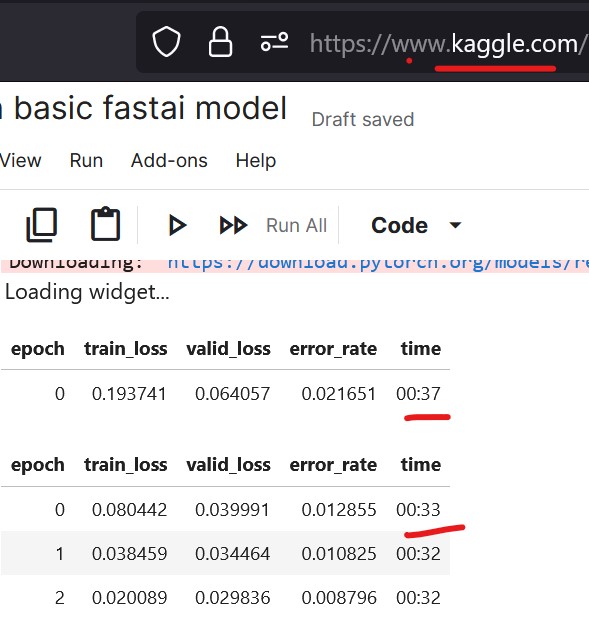

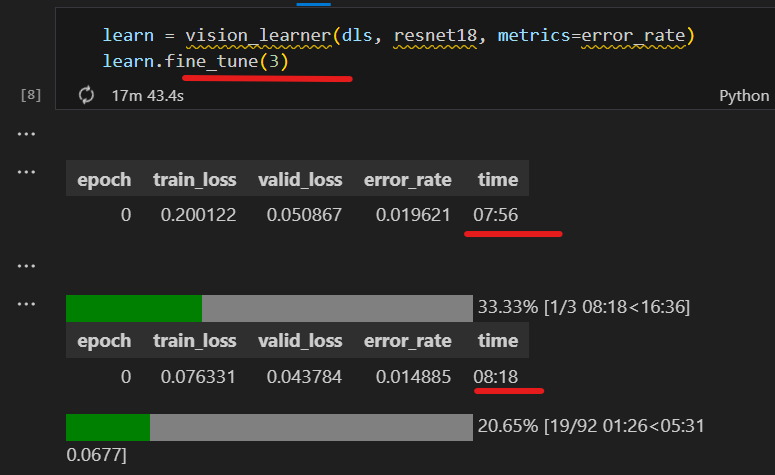

I fine-tuned the model both on Kaggle (GPU) and locally (no GPU) and, unsurprisingly, it is dramatically faster with a GPU.

GPUs from Nvidia (and other vendors) are designed to run a huge number of calculations in parallel, so a whole batch of images grouped together into a tensor (fastai’s default batch size is 64) gets processed very quickly. A laptop without a GPU like mine still works through the same batches, but its CPU has far fewer cores to share the work, so each batch takes much longer.

learn = vision_learner(dls, resnet18, metrics=error_rate)
learn.fine_tune(3)

GPU took 35 seconds an epoch

Non-GPU took 8 minutes an epoch
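To put those two numbers side by side, here is a quick back-of-the-envelope calculation. It relies on fine_tune(3) running one frozen epoch first (freeze_epochs defaults to 1) followed by the 3 requested epochs, and it assumes every epoch takes about as long as the timings above. The frozen epoch is usually a little quicker, so treat the totals as rough estimates.

```python
# Per-epoch timings reported above, converted to seconds.
GPU_SECONDS_PER_EPOCH = 35
CPU_SECONDS_PER_EPOCH = 8 * 60

# fine_tune(3): 1 frozen epoch (the default) plus 3 unfrozen epochs.
FROZEN_EPOCHS = 1
UNFROZEN_EPOCHS = 3

def total_minutes(seconds_per_epoch, epochs):
    # Assumption: every epoch takes the same amount of time.
    return seconds_per_epoch * epochs / 60

total_epochs = FROZEN_EPOCHS + UNFROZEN_EPOCHS
speedup = CPU_SECONDS_PER_EPOCH / GPU_SECONDS_PER_EPOCH
gpu_total = total_minutes(GPU_SECONDS_PER_EPOCH, total_epochs)
cpu_total = total_minutes(CPU_SECONDS_PER_EPOCH, total_epochs)

print(f"Speed-up per epoch: {speedup:.1f}x")
print(f"Whole run on GPU:   {gpu_total:.1f} minutes")
print(f"Whole run on CPU:   {cpu_total:.1f} minutes")
```

So the GPU is roughly 14 times faster per epoch, and the full run is a couple of minutes versus about half an hour.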

5. Export the model

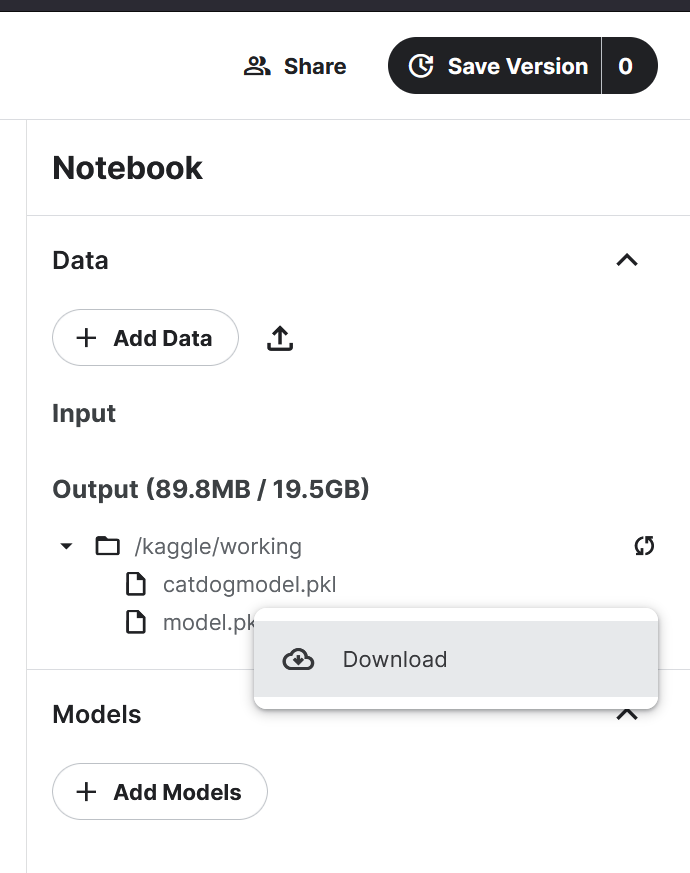

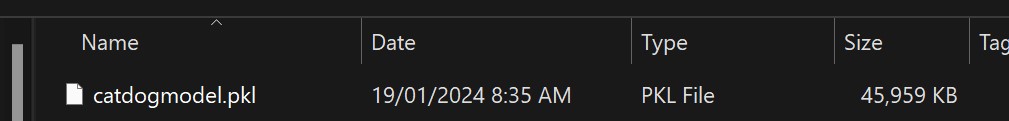

learn.export('catdogmodel.pkl')

In Kaggle, the model is saved to their cloud, and you can access it from the right-hand sidebar under Notebook -> Data -> Output.

It’s only 46 MB, not too shabby!

That’s it! We’ll go through how to use a saved/exported model in an upcoming post.
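If you exported locally and want to check the size yourself, something along these lines should do it. This is a minimal sketch: file_size_mb is a hypothetical helper, and it assumes the file landed in the current working directory, which is where I'd expect it here since the DataLoaders were created with '.' as their path.

```python
from pathlib import Path

def file_size_mb(path):
    # Size on disk in megabytes, taking 1 MB as 1,000,000 bytes.
    return Path(path).stat().st_size / 1_000_000

# Assumed location: the working directory the notebook was run from.
model_path = Path('catdogmodel.pkl')

if model_path.exists():
    print(f"{model_path} is {file_size_mb(model_path):.1f} MB")
else:
    print(f"{model_path} not found; run learn.export first")
```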